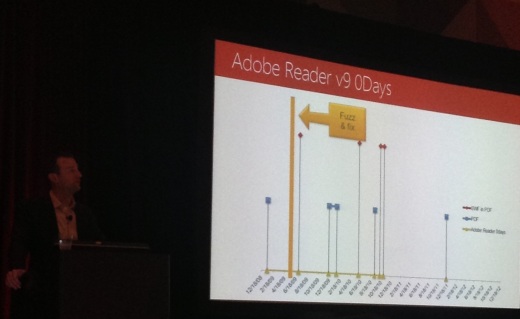

With the ongoing onslaught of high-profile security breaches, it has fast become a no-brainer that we should use a different password for each service we subscribe to, and that each one should be long and complex. Unfortunately, not everyone can remember something like ‘ZG9uJ3QgZG8gdGhpcw==’, so many services have implemented Multi-Factor Authentication (MFA) to better defend their users.

Password-based authentication has long been the most popular way of logging in to common services. A password is something a user knows. Multi-Factor Authentication adds further identifying factors to make it harder for attackers to access accounts that aren’t theirs. Examples of factors we can add include something a user has (a cryptographic token or a phone) or something a user is (physical features, i.e. biometrics).

Two Factors are better than one

Many common services today provide the option of enabling Two Factor Authentication (2FA). This uses either a time-based code generated by an Authenticator app (such as Google Authenticator, or Facebook’s Code Generator), or SMS text messages sent to a mobile device. The two factors in question here are something one knows (password) and something one has (phone).
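For the curious, the time-based codes mentioned above are typically produced with the TOTP algorithm (RFC 6238), which builds on HOTP (RFC 4226). The sketch below is a minimal Python illustration, assuming the common defaults of HMAC-SHA-1, a 30-second time step and 6 digits. The function names `hotp` and `totp` are chosen here for illustration, and the secret is the test key published in the RFCs, not anything a real service would issue.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """Counter-based one-time password (RFC 4226)."""
    # HMAC the 8-byte big-endian counter with the shared secret.
    digest = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    # Dynamic truncation: the low 4 bits of the last byte pick an offset.
    offset = digest[-1] & 0x0F
    value = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(value % 10 ** digits).zfill(digits)


def totp(secret: bytes, at_time: Optional[float] = None,
         step: int = 30, digits: int = 6) -> str:
    """Time-based one-time password (RFC 6238): HOTP over a time counter."""
    if at_time is None:
        at_time = time.time()
    return hotp(secret, int(at_time // step), digits)


if __name__ == "__main__":
    rfc_test_secret = b"12345678901234567890"  # test key from the RFCs
    # RFC 6238 test vector for T = 59 seconds, 8 digits: prints 94287082
    print(totp(rfc_test_secret, at_time=59, digits=8))
    # A 6-digit code for the current 30-second window
    print(totp(rfc_test_secret))
```

Because both the phone and the service hold the same secret and agree on the time, each can compute the code independently, which is why an authenticator app keeps working without a network connection.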

2FA can also reveal that an account is being targeted: when the correct password is entered, some services send the user an SMS code to complete the login. Without this code a malicious actor cannot enter the system, but the unexpected message makes the user aware of the login attempt, and they can then change their password.

How it works

After 2FA is enabled, logging in to a service online starts with the usual password prompt; once the password is accepted, an additional step asks for a short code, either generated by the corresponding authenticator app or received via SMS. Some systems that are not web-based, such as mobile apps, may not support 2FA login. For these cases, users can generate application-specific passwords. Browsers can also be remembered so that a code is not needed on every login.
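The flow described above can be sketched from the service's side. This is a simplified illustration in Python, not how any particular provider implements it: the `Account` record, the `log_in` function and its return values are all hypothetical, and the expected code is simply passed in, whereas a real service would derive it from an authenticator secret or from the SMS it just sent.

```python
import hashlib
import hmac
from dataclasses import dataclass, field
from typing import Optional


def hash_password(password: str, salt: bytes) -> bytes:
    # PBKDF2 from the standard library; the iteration count is illustrative.
    return hashlib.pbkdf2_hmac("sha256", password.encode(), salt, 100_000)


@dataclass
class Account:
    """Hypothetical account record, not any real service's schema."""
    salt: bytes
    password_hash: bytes
    remembered_browsers: set = field(default_factory=set)


def log_in(account: Account, password: str, browser_id: str,
           submitted_code: Optional[str] = None,
           expected_code: Optional[str] = None) -> str:
    """Return 'denied', 'code_required' or 'ok'."""
    # Step 1: something the user knows.
    candidate = hash_password(password, account.salt)
    if not hmac.compare_digest(candidate, account.password_hash):
        return "denied"
    # A browser the user chose to remember skips the second step.
    if browser_id in account.remembered_browsers:
        return "ok"
    # Step 2: something the user has (the device that shows the code).
    if submitted_code is None:
        return "code_required"
    if expected_code is None:
        return "denied"
    # Constant-time comparison avoids leaking how many digits matched.
    if not hmac.compare_digest(submitted_code.encode(), expected_code.encode()):
        return "denied"
    return "ok"
```

Note that the code is only requested after the password check succeeds, which matches the behaviour described earlier: an unexpected code request is a sign that someone already knows the password.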

This type of verification is commonplace in the banking industry, and we are already somewhat accustomed to entering a security code generated by a separate device when carrying out transactions. Now, this additional layer of security can be turned on in many more places.

To help reduce the chances of that Twitter account someday sending spam to its followers, that Facebook profile being defaced with questionable content for all to see, and to make those files on Dropbox safer from prying eyes, consider turning on 2FA for the following systems:

Facebook Login Approvals

1) Visit https://www.facebook.com/settings

2) Navigate to the Security tab

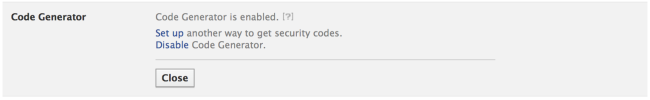

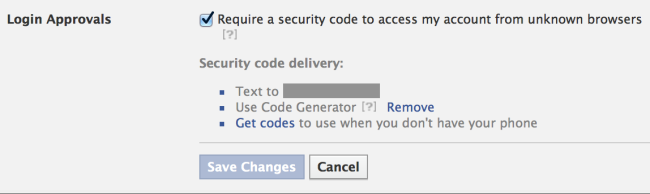

3) Enable the Code Generator (and SMS codes if desired)

4) Enable login approvals

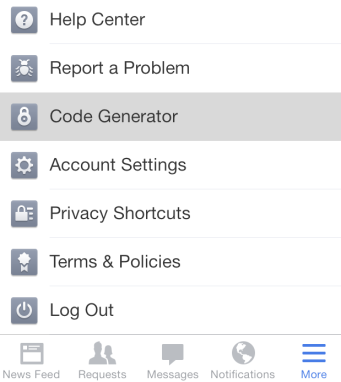

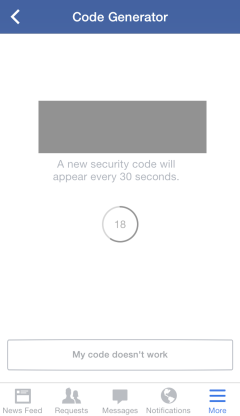

The mobile app will now have an option to invoke the Code Generator, as shown below on iOS:

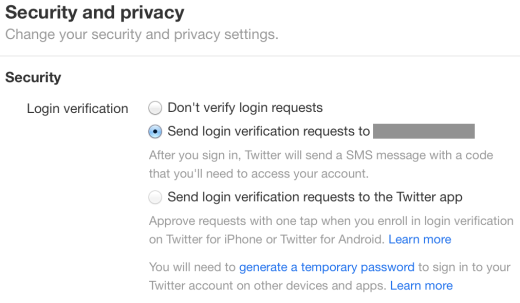

Twitter Login Verification

1) Navigate to https://twitter.com/settings/security

2) Opt in to receiving login verification requests via either SMS or the app

3) Follow the instructions to generate a temporary password for other apps if necessary

4) While we’re here, click the option to “require personal information to reset my password” too

5) If so inclined, uncheck the option to “tailor ads based on information shared by ad partners”

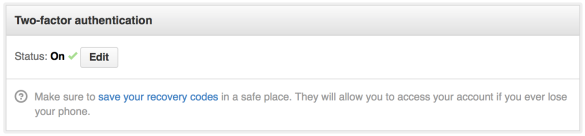

Dropbox Two-Step Verification

1) Visit https://www.dropbox.com/account/security

2) Enable two-step verification

Google 2-Step Verification

1) Sign in to your Google Account at www.google.com

2) Follow the instructions at http://accounts.google.com/SmsAuthConfig

3) Optionally download the Google Authenticator mobile app

Microsoft Two-Step Verification

1) Sign in at https://login.live.com

2) Navigate to https://account.live.com/Proofs/Manage

3) Enable two-step verification

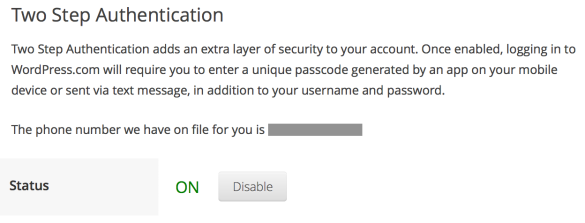

WordPress Two Step Authentication

1) Sign in to WordPress and navigate to https://wordpress.com/settings/security/

2) Enable the option for Two Step Authentication for SMS/Authenticator

GitHub Two-Factor Authentication

1) Sign in to GitHub and visit https://github.com/settings/admin

2) Enable the option for Two-Factor Authentication

LinkedIn Two-Step Verification

1) Navigate to https://www.linkedin.com/settings/

2) Select the option to manage security settings

3) Click turn on two-step verification

2FA all the services

A range of other websites also support 2FA, including PayPal, Steam and Amazon Web Services – so this is certainly not an exhaustive list.

Be sure to take note of the backup codes provided by any service for which 2FA is enabled, and store them in a safe place. It is also worth mentioning that the Authenticator mobile app has had problems in the past that left users unable to generate codes, so always keep backup codes and/or the SMS option available.
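To illustrate why backup codes deserve careful storage, here is a rough sketch of how a service might issue and redeem them. It is an assumption-laden example, not a description of any provider's scheme: the code length, the count of ten and the function names are made up for illustration. The key idea is that each code is random, kept only as a hash on the server, and works exactly once.

```python
import hashlib
import secrets


def generate_backup_codes(count: int = 10, digits: int = 8) -> list:
    """Create random numeric codes using a cryptographically secure source."""
    return [f"{secrets.randbelow(10 ** digits):0{digits}d}" for _ in range(count)]


def hash_code(code: str) -> str:
    # The service stores only hashes, so a database leak does not reveal codes.
    return hashlib.sha256(code.encode()).hexdigest()


def redeem(code: str, stored_hashes: set) -> bool:
    """Accept a backup code once, then invalidate it."""
    hashed = hash_code(code)
    if hashed in stored_hashes:
        stored_hashes.remove(hashed)  # single use
        return True
    return False


if __name__ == "__main__":
    codes = generate_backup_codes()            # shown to the user once
    stored = {hash_code(c) for c in codes}     # kept by the service
    print(redeem(codes[0], stored))            # True: first use succeeds
    print(redeem(codes[0], stored))            # False: already used
```

Since a used code is gone for good, it is worth striking it off the saved list and regenerating the set when only a few remain.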

For those who are interested in locking down their security further, note that enabling 2FA in this way makes the device providing the codes a potential attack vector in its own right. Consider turning off SMS message previews on the lock screen of mobile devices, so that the codes aren’t freely displayed to anyone who happens to glance over.

With users’ attitudes beginning to shift in favour of authentication mechanisms that look beyond passwords, one can hope that the rate at which accounts are ‘hacked’ will slow down. Above all, the first point of entry remains the password, so keep passwords strong, complex and unlike dictionary words. If possible, use a passphrase.
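As a small illustration of the passphrase idea, the Python sketch below picks words at random and estimates the resulting strength. The eight-word list is a placeholder for demonstration only and is far too small for real use; a published list such as the 7,776-word Diceware list is the kind of thing one would use in practice. The function names are illustrative.

```python
import math
import secrets

# Placeholder list for demonstration only; far too small for real passphrases.
DEMO_WORDS = ["copper", "lantern", "orbit", "velvet",
              "harbor", "maple", "quartz", "thistle"]


def make_passphrase(words: list, count: int = 5, sep: str = " ") -> str:
    """Join randomly chosen words, using a cryptographically secure source."""
    return sep.join(secrets.choice(words) for _ in range(count))


def entropy_bits(list_size: int, count: int) -> float:
    """Bits of entropy when each word is drawn uniformly from the list."""
    return count * math.log2(list_size)


if __name__ == "__main__":
    print(make_passphrase(DEMO_WORDS))
    # Five words from this 8-word demo list: 15.0 bits, trivially guessable
    print(entropy_bits(len(DEMO_WORDS), 5))
    # Five words from a 7,776-word list: roughly 64.6 bits
    print(round(entropy_bits(7776, 5), 1))
```

The strength comes from the random choice and the size of the list, not from the words being obscure, so a phrase picked by hand from a favourite quote does not offer the same protection.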

Alex Kara